Episode 228

The Spectre of Meltdown

January 10th, 2018

1 hr 51 mins 56 secs

Tags

About this Episode

We review the information about Spectre & Meltdown thus far, we look at NetBSD memory sanitizer progress, Postgres on ZFS & show you a bit about NomadBSD.

This episode was brought to you by

Headlines

Meltdown Spectre

- Official Site

- Kernel-memory-leaking Intel processor design flaw forces Linux, Windows redesign

- Intel’s official response

- The Register mocks intels response with pithy annotations

- Intel’s Analysis PDF

- XKCD

- Response from FreeBSD

- FreeBSD's patch WIP

- Why Raspberry Pi isn’t vulnerable to Spectre or Meltdown

- Xen mitigation patches

- Overview of affected FreeBSD Platforms/Architectures

- Groff's response ##### We’ll cover OpenBSD, NetBSD, and DragonflyBSD’s responses in next weeks episode. *** ###The LLVM Memory Sanitizer support work in progress > In the past 31 days, I've managed to get the core functionality of MSan to work. This is an uninitialized memory usage detector. MSan is a special sanitizer because it requires knowledge of every entry to the basesystem library and every entry to the kernel through public interfaces. This is mandatory in order to mark memory regions as initialized. Most of the work has been done directly for MSan. However, part of the work helped generic features in compiler-rt.

- Sanitizers > Changes in the sanitizer are listed below in chronological order. Almost all of the changes mentioned here landed upstream. A few small patches were reverted due to breaking non-NetBSD hosts and are rescheduled for further investigation. I maintain these patches locally and have moved on for now to work on the remaining features.

- NetBSD syscall hooks > I wrote a large patch (815kb!) adding support for NetBSD syscall hooks for use with sanitizers.

- NetBSD ioctl(2) hooks > Similar to the syscall hooks, there is need to handle every ioctl(2) call. I've created the needed patch, this time shorter - for less than 300kb.

- New patches still pending for upstream review > There are two corrections that I've created, and they are still pending upstream for review:

- Add MSan interceptor for fstat(2)](https://reviews.llvm.org/D41637)

- Correct the setitimer interceptor on NetBSD)](https://reviews.llvm.org/D41502) > I've got a few more local patches that require cleanup before submitting to review.

- NetBSD basesystem corrections

- Sanitizers in Go

- The MSan state as of today

- Solaris support in sanitizers > I've helped the Solaris team add basic support for Sanitizers (ASan, UBsan). This does not help NetBSD directly, however indirectly it improves the overall support for non-Linux hosts and helps to catch more Linuxisms in the code.

- Plan for the next milestone > I plan to continue the work on MSan and correct sanitizing of the NetBSD basesystem utilities. This mandates me to iterate over the basesystem libraries implementing the missing interceptors and correcting the current support of the existing ones. My milestone is to build all src/bin programs against Memory Sanitizer and when possible execute them cleanly.

- This work was sponsored by The NetBSD Foundation.

- The NetBSD Foundation is a non-profit organization and welcomes any donations to help us continue funding projects and services to the open-source community. Please consider visiting the following URL, and chip in what you can: http://netbsd.org/donations/#how-to-donate *** ##News Roundup ###MWL’s 2017 Wrap-Up > The obvious place to start is my 2016 wrap-up post](https://blather.michaelwlucas.com/archives/2822), where I listed goals for 2017. As usual, these goals were wildly delusional. > The short answer is, my iron was back up to normal. My writing speed wasn’t, though. I’d lost too much general health, and needed hard exercise to recover it. Yes, writing requires physical endurance. Maintaining that level of concentration for several hours a day demands a certain level of blood flow to the brain. I could have faked it in a day job, but when self-employed as an artist? Not so much. > Then there’s travel. I did my usual BSDCan trip, plus two educational trips to Lincoln City, Oregon. The current political mayhem convinced me that if I wanted to hit EuroBSDCon any time in the next few years, I should do it in the very near future. So I went to Paris, where I promptly got pickpocketed. (Thankfully, they didn’t get my passport.) I was actively writing the third edition of Absolute FreeBSD, so I visited BSDCam in Cambridge to get the latest information and a sense of where FreeBSD was going. I also did weekends at Kansas LinuxFest (because they asked and paid for my trip) and Penguicon. > (Because people will ask: why EuroBSDCon and not AsiaBSDCon? A six-hour transatlantic flight requires that I take a substantial dose of heavy-grade tranquilizers. I’m incapable of making intelligent decisions while on those drugs, or for several hours afterward. They don’t last long enough for twelve-hour flight to Japan, so I need to be accompanied by someone qualified to tell me when I need to take the next dose partway through the flight. This isn’t a predetermined time that I can set an alarm for; it depends on how the clonazepam affects me at those altitudes. A drug overdose while flying over the North Pole would be bad. When I can arrange that qualified companion, I’ll make the trip.) > I need most of the preceding week to prepare for long trips. I need the following week to recover from time shifts and general exhaustion. Additionally, I have to hoard people juice for a few weeks beforehand so I can deal with folks during these expeditions. Travel disrupts my dojo time as well, which impacts my health. > Taken as a whole: I didn’t get nearly as much done as I hoped.

- I wrote more stories, but Kris Rusch bludgeoned me into submitting them to trad markets. (The woman is a brute, I tell you. Cross her at your peril.)

- Among my 2017 titles, my fiction outsold the tech books. No, not Prohibition Orcs–all four of the people who buy those love them, but the sales tell me I’ve done something wrong with those tales.

- My cozy mystery git commit murder outsold Relayd and Httpd Mastery.

- But what outdid them both, as well as most of my older books? What title utterly dominated my sales for the last quarter of the year? It was of course, my open source software political satire disguised as porn Savaged by Systemd: an Erotic Unix Encounter. > I can’t believe I just wrote that paragraph.

- The good news is, once I recovered from EuroBSDCon, my writing got better.

- I finished Absolute FreeBSD, 3rd edition and submitted it to the publisher.

- I wrote the second edition of SSH Mastery (no link, because you can’t order it yet.)

- I’m plowing through git sync murder, the sequel to git commit murder. I don’t get to see the new Star Wars movie until I finish GSM, so hopefully that’ll be this month.

- All in all, I wrote 480,200 words in 2017. Most of that was after September. It’s annoyingly close to breaking half a million, but after 2016’s scandalous 195,700, I’ll take it. *** ###PG Phriday: Postgres on ZFS > ZFS is a filesystem originally created by Sun Microsystems, and has been available for BSD over a decade. While Postgres will run just fine on BSD, most Postgres installations are historically Linux-based systems. ZFS on Linux has had much more of a rocky road to integration due to perceived license incompatibilities. > As a consequence, administrators were reluctant or outright refused to run ZFS on their Linux clusters. It wasn’t until OpenZFS was introduced in 2013 that this slowly began to change. These days, ZFS and Linux are starting to become more integrated, and Canonical of Ubuntu fame even announced direct support for ZFS in their 16.04 LTS release. > So how can a relatively obscure filesystem designed by a now-defunct hardware and software company help Postgres? Let’s find out!

- Eddie waited til he finished high school

> Old server hardware is dirt cheap these days, and make for a perfect lab for testing suspicious configurations. This is the server we’ll be using for these tests for those following along at home, or want some point of reference:

Dell R710 x2 Intel X5660 CPUs, for up to 24 threads 64GB RAM x4 1TB 7200RPM SATA HDDs H200 RAID card configured for Host Bus Adapter (HBA) mode 250GB Samsung 850 EVO SSD> The H200 is particularly important, as ZFS acts as its own RAID system. It also has its own checksumming and other algorithms that don’t like RAID cards getting in the way. As such, we put the card itself in a mode that facilitates this use case. > Due to that, we lose out on any battery-backed write cache the RAID card might offer. To make up for it, it’s fairly common to use an SSD or other persistent fast storage to act both as a write cache, and a read cache. This also transforms our HDDs into hybrid storage automatically, which is a huge performance boost on a budget. - She had a guitar and she taught him some chords > First things first: we need a filesystem. This hardware has four 1TB HDDs, and a 250GB SSD. To keep this article from being too long, we’ve already placed GPT partition tables on all the HDDs, and split the SSD into 50GB for the OS, 32GB for the write cache, and 150GB for the read cache. A more robust setup would probably use separate SSDs or a mirrored pair for these, but labs are fair game.

- They moved into a place they both could afford > Let’s start by getting a performance baseline for the hardware. We might expect peak performance at 12 or 24 threads because the server has 12 real CPUs and 24 threads, but query throughput actually topped out at concurrent 32 processes. We can scratch our heads over this later, for now, we can consider it the maximum capabilities of this hardware. Here’s a small sample: ``` $> pgbench -S -j 32 -c 32 -M prepared -T 20 pgbench

...

tps = 264661.135288 (including connections establishing)

tps = 264849.345595 (excluding connections establishing)

> So far, this is pretty standard behavior. 260k prepared queries per second is great read performance, but this is supposed to be a filesystem demonstration. Let’s get ZFS involved.

+ The papers said Ed always played from the heart

> Let’s repeat that same test with writes enabled. Once that happens, filesystem syncs, dirty pages, WAL overhead, and other things should drastically reduce overall throughput. That’s an expected result, but how much are we looking at, here?

$> pgbench -j 32 -c 32 -M prepared -T 10 pgbench

...

tps = 6153.877658 (including connections establishing)

tps = 6162.392166 (excluding connections establishing)

> SSD cache or not, storage overhead is a painful reality. Still, 6000 TPS with writes enabled is a great result for this hardware. Or is it? Can we actually do better?

> Consider the Postgres full_page_writes parameter. Tomas Vondra has written about it in the past as a necessity to prevent WAL corruption due to partial writes. The WAL is both streaming replication and crash recovery, so its integrity is of utmost importance. As a result, this is one parameter almost everyone should leave alone.

> ZFS is Copy on Write (CoW). As a result, it’s not possible to have a torn page because a page can’t be partially written without reverting to the previous copy. This means we can actually turn off full_page_writes in the Postgres config. The results are some fairly startling performance gains:

$> pgbench -j 32 -c 32 -M prepared -T 10 pgbench

tps = 10325.200812 (including connections establishing)

tps = 10336.807218 (excluding connections establishing)

> That’s nearly a 70% improvement. Due to write amplification caused by full page writes, Postgres produced 1.2GB of WAL files during a 1-minute pgbench test, but only 160MB with full page writes disabled.

> To be fair, a 32-thread pgbench write test is extremely abusive and certainly not a typical usage scenario. However, ZFS just ensured our storage a much lower write load by altering one single parameter. That means the capabilities of the hardware have also been extended to higher write workloads as IO bandwidth is not being consumed by WAL traffic.

+ They both met movie stars, partied and mingled

> Astute readers may have noticed we didn’t change the default ZFS block size from 128k to align with the Postgres default of 8kb.

> As it turns out, the 128kb blocks allow ZFS to better combine some of those 8kb Postgres pages to save space. That will allow our measly 2TB to go a lot further than is otherwise possible.

> Please note that this is not de-duplication, but simple lz4 compression, which is nearly real-time in terms of CPU overhead. De-duplication on ZFS is currently an uncertain bizzaro universe populated with misshapen horrors crawling along a broken landscape. It’s a world of extreme memory overhead for de-duplication tables, and potential lost data due to inherent conflicts with the CoW underpinnings. Please don’t use it, let anyone else use it, or even think about using it, ever.

+ They made a record and it went in the chart

> We’re still not done. One important aspect of ZFS as a CoW filesystem, is that it has integrated snapshots.

> Consider the scenario where a dev is connected to the wrong system and drops what they think is a table in a QA environment. It turns out they were in the wrong terminal and just erased a critical production table, and now everyone is frantic.

+ The future was wide open

> It’s difficult to discount an immediately observable reduction in write overhead. Snapshots have a multitude of accepted and potential use cases, as well. In addition to online low-overhead compression, and the hybrid cache layer, ZFS boasts a plethora of features we didn’t explore.

> Built-in checksums with integrated self-healing suggest it isn’t entirely necessary to re-initialize an existing Postgres instance to enable checksums. The filesystem itself ensures checksums are validated and correct, especially if we have more than one drive resource in our pool. It even goes the extra mile and actively corrects inconsistencies when encountered.

> I immediately discounted ZFS back in 2012 because the company I worked for at the time was a pure Linux shop. ZFS was only available using the FUSE driver back then, meaning ZFS only worked through userspace with no real kernel integration. It was fun to tinker with, but nobody sane would use that on a production server of any description.

> Things have changed quite drastically since then. I’ve stopped waiting for btrfs to become viable, and ZFS has probably taken the throne away from XFS as my filesystem of choice. Future editions of the Postgres High Availability Cookbook will reflect this as well.

> Postgres MVCC and ZFS CoW seem made for each other. I’m curious to see what will transpire over the next few years now that ZFS has reached mainstream acceptance in at least one major Linux distribution.

***

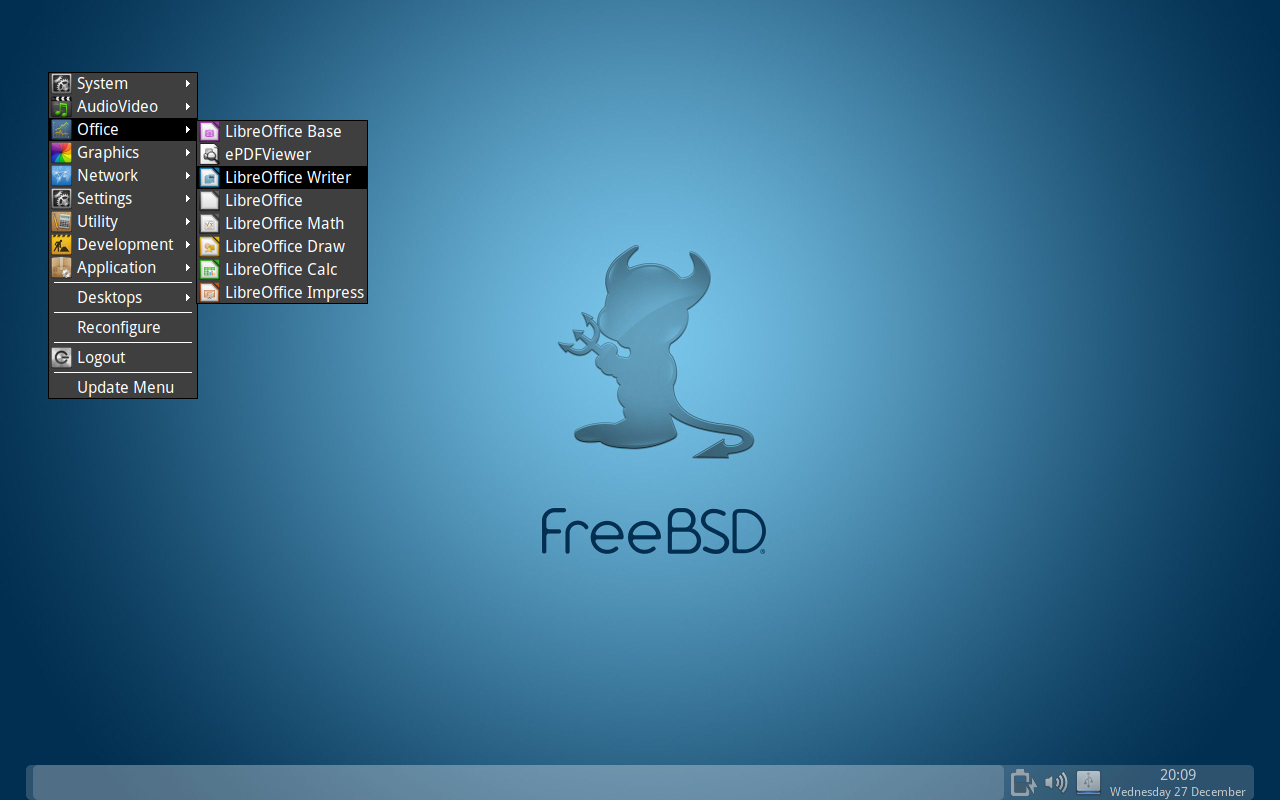

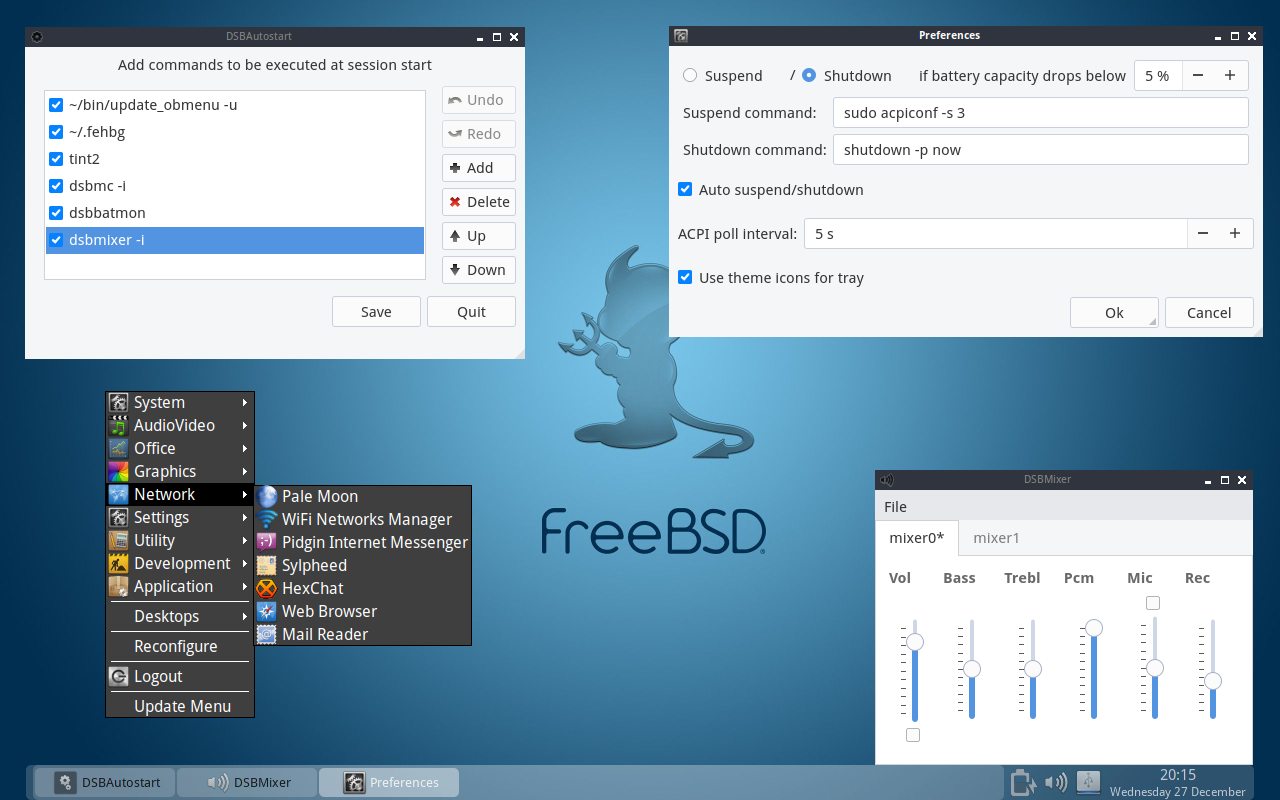

###[NomadBSD](https://github.com/mrclksr/NomadBSD)

+ About

> NomadBSD is a live system for flash drives, based on FreeBSD.

+ Screenshots

+ Requirements for building the image

A recent FreeBSD system

+ Requirements for running NomadBSD

* A 4GB (or more) flash drive

* A System capable running FreeBSD 11.1 (amd64)

+ Building the image

~~ csh

# make image

~~

+ Writing the image to an USB memory stick

~~ csh

# dd if=nomadbsd.img of=/dev/da0 bs=10240 conv=sync

~~

+ Resize filesystem to use the entire USB memory

+ Boot NomadBSD into single user mode, and execute:

~~

# gpart delete -i 2 da0s1

# gpart resize -i 1 da0

# gpart commit da0s1

~~

+ Determine the partition size in megabytes using

````fdisk da0````

+ and calculate the remaining size of da0s1a:

````<REMAIN> = <SIZE OF PARTITION IN MB> - <DESIRED SWAP SIZE IN MB>````.

~~

# gpart resize -i 1 -s <REMAIN>M da0s1

# gpart add -t freebsd-swap -i 2 da0s1

# glabel label NomadBSDsw da0s1b

# service growfs onestart

# reboot

~~

+ [FreeBSD forum thread](https://forums.freebsd.org/threads/63888/)

+ [A short screen capture video of the NomadBSD system running in VirtualBox](https://freeshell.de/~mk/download/nomad_capture.mp4)

***

##Beastie Bits

+ [Coolpkg, a package manager inspired by Nix for OpenBSD](https://github.com/andrewchambers/coolpkg)

+ [zrepl - ZFS replication](https://zrepl.github.io/)

+ [OpenBSD hotplugd automount script](https://bijanebrahimi.github.io/blog/openbsd-hotplugd-scripting.html)

+ [Ancient troff sources vs. modern-day groff](https://virtuallyfun.com/2017/12/22/learn-ancient-troff-sources-vs-modern-day-groff/)

+ [Paypal donation balance and status.. thanks everyone!](http://lists.dragonflybsd.org/pipermail/users/2017-December/313752.html)

+ [Supervised FreeBSD rc.d script for a Go daemon (updated in last few days)](https://redbyte.eu/en/blog/supervised-freebsd-init-script-for-go-deamon/)

+ [A Brief History of sed](https://blog.sourcerer.io/a-brief-history-of-sed-6eaf00302ed)

+ [Flamegraph: Why does my AWS instance boot so slow?](http://www.daemonology.net/timestamping/tslog-c5.4xlarge.svg)

***

##Feedback/Questions

+ Jeremy - [Replacing Drive in a Zpool](http://dpaste.com/319593M#wrap)

+ [Dan’s Blog ](https://dan.langille.org/2017/08/16/swapping-5tb-in-3tb-out/)

+ Tim - [Keeping GELI key through reboot](http://dpaste.com/11QTA06)

+ Brian - [Mixing 2.5 and 3.5 drives](http://dpaste.com/2JQVD10#wrap)

+ Troels - [zfs swap on FreeBSD](http://dpaste.com/147WAFR#wrap)

***